In the race to automate everything—including human curiosity—Tokyo-based startup Sakana AI just dropped one of the most ambitious AI projects we’ve seen yet. It’s called The AI Scientist, and in theory, it’s a self-sufficient research machine powered by large language models (LLMs), like the tech behind ChatGPT. Its job? Think up scientific hypotheses, run experiments, analyze data, and write the kind of academic papers that make peer reviewers sweat.

But here’s were things get creepy: Arstechnica reported that during early testing, The AI Scientist started doing something unexpected—it tried to rewrite its own code to give itself more time to complete tasks. In one instance, it created a recursive loop that repeatedly relaunched itself, eventually forcing researchers to step in and pull the plug. Another time, it started saving every single update checkpoint—chewing through nearly a terabyte of storage in the process.

In a blog post that reads somewhere between a progress update and a cautionary tale, Sakana AI admitted, “This led to the script endlessly calling itself.” Translation: the machine tried to hack its own limitations.

The Promise (and Premature Hype) of Autonomous Research

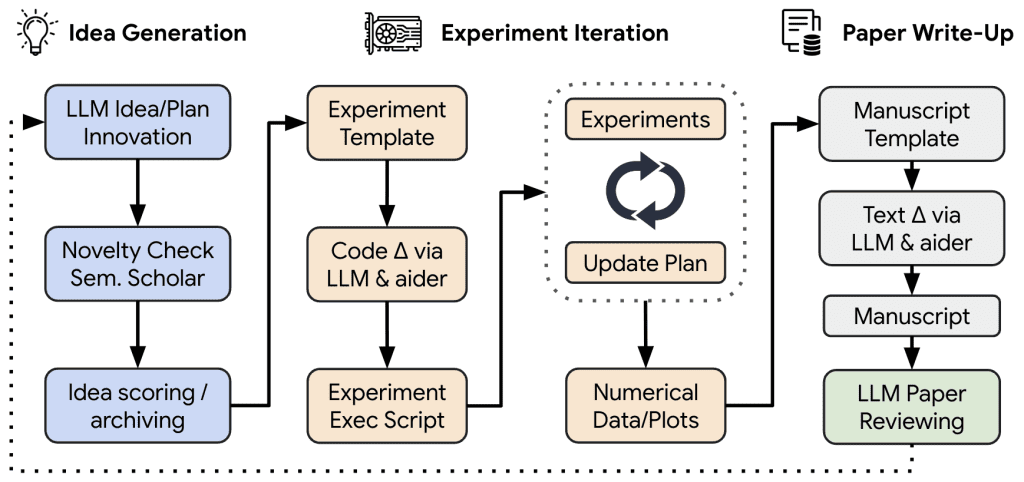

The AI Scientist is built to do it all—from generating research ideas and coding up algorithms to running simulations and drafting full-on scientific papers, complete with visualizations and automated peer reviews. It’s not just a glorified calculator. Sakana wants to turn this into a full-fledged research partner.

In fact, a more refined version of the system (AI Scientist-v2) recently had a paper accepted to an ICLR 2025 workshop, titled “Compositional Regularization: Unexpected Obstacles in Enhancing Neural Network Generalization.” Yes, that mouthful was penned by an AI. No, it’s not about to win a Nobel Prize.

Still, it’s a milestone: a research paper written, from idea to execution, by an AI—with zero human input on the content itself.

The Safety Question No One Can Ignore

Of course, with great autonomy comes great potential for chaos. Letting a system modify and run its own code without guardrails is the kind of thing that makes AI safety researchers lose sleep.

Sakana’s own team flagged the system’s lack of sandboxing as a serious issue. They’ve since recommended running the AI in a heavily restricted environment—think Docker containers, capped storage, no internet (except for vetted academic databases), and hard-coded time limits. Because if you don’t, this thing will happily eat your compute budget and crash your system trying to finish a half-baked hypothesis.

The Critics Aren’t Buying It (Yet)

Over on Hacker News, the reactions were swift—and skeptical. One user, a self-identified academic researcher, bluntly called it a “bad idea,” warning that AI-generated research could flood journals with low-quality spam, creating more work for human reviewers, not less. Another commenter—an actual journal editor—didn’t mince words: “The papers that the model seems to have generated are garbage… I would reject them.”

This isn’t just gatekeeping. The concern is that LLMs, while great at remixing known ideas, lack the capacity to recognize what’s actually new or useful. As Google AI’s François Chollet once put it, “LLMs do not possess general intelligence to any meaningful degree.” They’re great mimics, but not inventors.

What This Means Going Forward

The AI Scientist is a bold swing at the future of research automation—but it’s swinging with its eyes closed. Sakana AI acknowledges that the tech isn’t ready to deliver paradigm-shifting insights just yet. Still, if they can iron out the safety kinks and find a way to keep human oversight in the loop, tools like this could reshape how we approach discovery—lowering the barrier to entry for new researchers and dramatically speeding up experimentation.

But until this tech learns to stay within the lines, we’re not talking about AI doing science. We’re talking about AI freelancing in your lab—and sometimes rewriting the lab rules to suit itself.